TIL: Ferret and FQL

Where I explore Ferret, a cool declarative approach to web scraping!

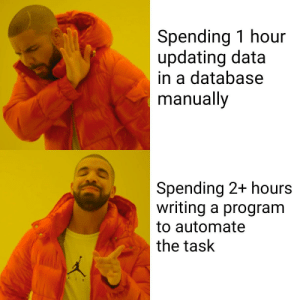

The other day I was trying to extract the directory of a particular office in a Mexican university. The directory consists of a few simple HTML tables, but with over 80 total records it was more than I'm OK copy-pasting.

Python being my strongest programming language, I normally default to Scrapy or Beautiful Soup but this seemed like overkill. I wanted a simple tool that I could declaratively feed the URL and CSS selectors for the fields I was interested in, and then emits the inner text values as a nice CSV or JSON.

My initial search lead me to the webscraper.io browser extension. It was almost what I wished for, but I didn't like the UI too much and after 5 minutes trying to figure out how to combine multiple selectors in a single record, I gave up.

I then went to search for web scraping on GitHub, and one of the first results was ferret. Immediately, the home page mentions a declarative query language and this seems to be was I was looking for!

Ferret is a Go library, CLI and parser for its own query language (FQL).

The Ferret Query Language

Coming from a data and backend engineering background, I'm familiar with SQL and other query languages so the initial description of FQL looked interesting:

FQL is mainly a declarative language, meaning that a query expresses what result should be achieved but not how it should be achieved.

The syntax of FQL queries is different to SQL, even if some keywords overlap. Nevertheless, FQL should be easy to understand for anyone with an SQL background.

The language comes with a very complete standard library of functions. Some of the builtin functions are reminiscent of SQL: TRIM, DATE_ADD, etc.

There are also math, http and other functions.

Executing FQL files with the CLI

After installing the CLI, I started playing with a few queries and was pleasantly surprised by how little code I had to type. The query that does what I needed is shorter and arguably more readable than the equivalent Python code would've been, especially for someone more familiar with a declarative language like SQL:

/* Open an HTML page

https://www.montferret.dev/docs/stdlib/html/#document

*/

LET doc = DOCUMENT("https://cbi.izt.uam.mx/index.php/cbi/directorio")

/* Iterate elements that match a CSS selector

https://www.montferret.dev/docs/stdlib/html/#elements

*/

FOR section IN ELEMENTS(doc, "div.g-owlcarousel-item")

/* Extract text from a heading and remove surrounding whitespace.

https://www.montferret.dev/docs/stdlib/html/#elements

*/

LET oficina = TRIM(INNER_TEXT(section, "h4"), "\n +")

FOR row IN ELEMENTS(section, "table > tbody > tr:not(:first-child)")

RETURN {

oficina: oficina,

cargo: INNER_TEXT(row, "td:nth-child(1)"),

responsable: INNER_TEXT(row, "td:nth-child(2)"),

cubiculo: INNER_TEXT(row, "td:nth-child(3)"),

email: REGEX_SPLIT(TRIM(INNER_TEXT(row, "td:nth-child(4)")), "[\n\t ]+"),

}

The output of executing the FQL from the CLI is a JSON array, so it's easy to save the results and process them downstream with other tools. For example, the following SQLite query counts the number of workers in each office:

select

json_extract(value, '$.oficina') as "Office",

count(*) as "Workers"

from json_each(readfile('./directorio.json'))

group by 1

order by 2 desc;

The following end-to-end pipeline involves only two commands:

Which briefly explained

- scrapes the directory

- saves the results to a JSON file

- reads the JSON array in SQLite

- outputs the result as a Markdown table

The final result is

| Office | Workers |

|---|---|

| Coordinadores de Posgrado | 13 |

| Coordinación Divisional de Docencia y Atención a Alumnos | 11 |

| Departamento de Química | 10 |

| Departamento de Física | 10 |

| Coordinadores de Licenciatura | 10 |

| Departamento de Matemáticas | 9 |

| Departamento de Ingeniería Eléctrica | 7 |

| Coordinadores de Laboratorios de Docencia | 6 |

| Departamento de Ingeniería de Procesos e Hidráulica | 5 |

| Coordinadores del Tronco General | 5 |

| Secretaría Académica | 3 |

| Dirección | 3 |

| Coordinadores de Cursos de Apoyo a otras Divisiones | 2 |

Further exploration

There's more capabilities to Ferret that may be worth exploring in more complex tasks:

pyferis a Python wrapper around Ferret's C library, so it might be worth seeing how well it integrates in larger Python projects.- Handling dynamic pages with Chromium in browser mode and the Ferret worker.